Description

Better Robots.txt generates a virtual robots.txt for WordPress, improving your website’s SEO (indexation capabilities, Google ranking, etc.) and its loading performance. Our plugin is compatible with Yoast SEO, Rank Math, Google Merchant, WooCommerce, and directory-based network sites (MULTISITE), and since 2023 it has offered exclusive optimization settings based on Artificial Intelligence (OpenAI) for greater performance.

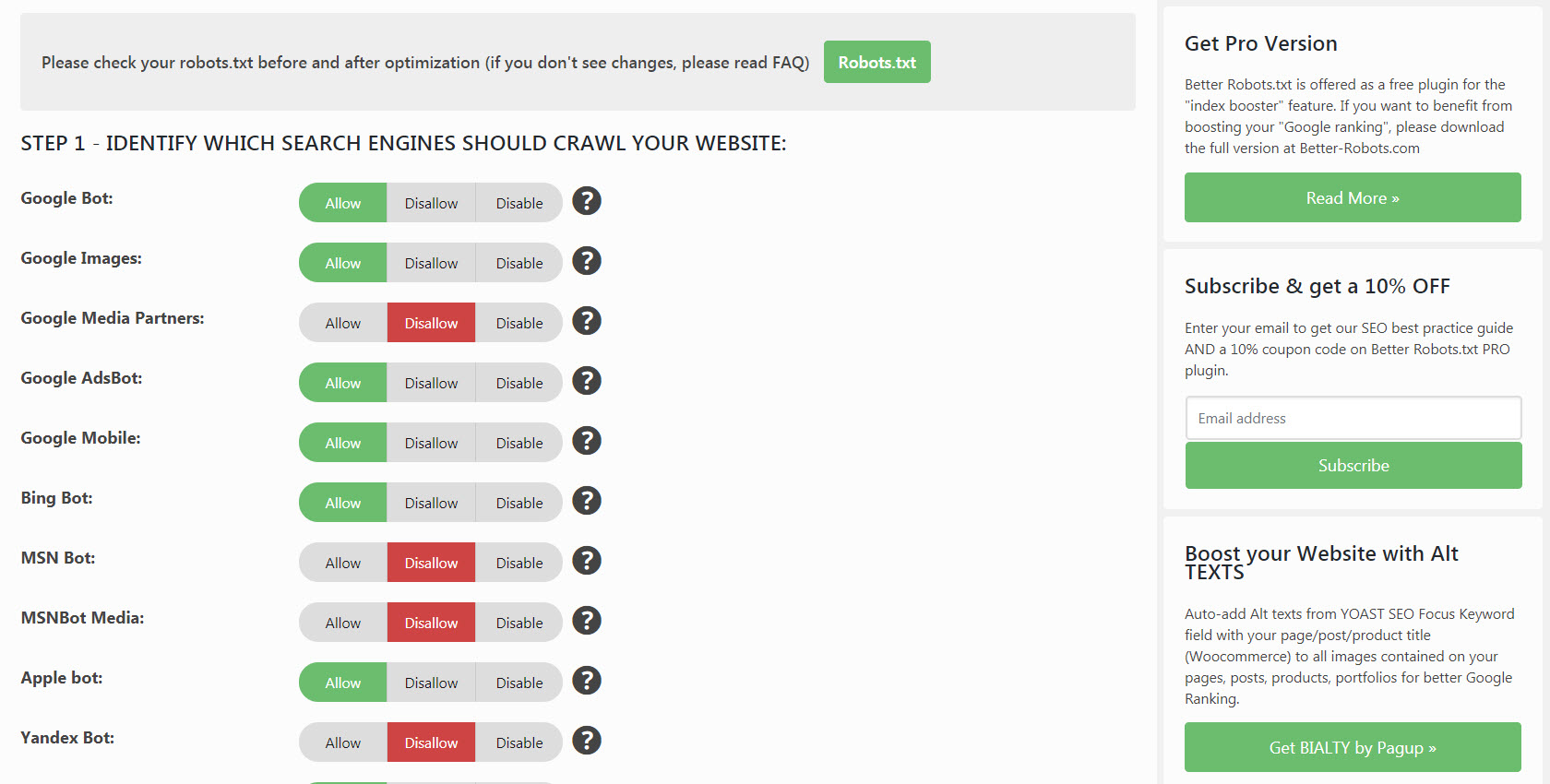

With Better Robots.txt, you can specify which search engines are permitted to crawl your website and give them clear directives about what they are allowed to do. You can also set a crawl-delay to protect your hosting server from aggressive scrapers. Through the custom settings box, Better Robots.txt gives you complete control over the content of your WordPress robots.txt.
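To illustrate per-bot directives and crawl-delay, here is a minimal sketch that parses a hypothetical robots.txt with Python’s standard `urllib.robotparser` module. The bot name `ExampleAggressiveBot` and the rules are invented for the example and are not what the plugin generates. Keep in mind that `Crawl-delay` is not universally honored: Bingbot respects it, while Googlebot ignores it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: "ExampleAggressiveBot" (an invented name) must wait
# 10 seconds between requests; every bot is kept out of /wp-admin/.
ROBOTS_TXT = """\
User-agent: ExampleAggressiveBot
Crawl-delay: 10
Disallow: /wp-admin/

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The named group applies to the aggressive bot; the "*" group applies to everyone else.
print(parser.crawl_delay("ExampleAggressiveBot"))  # 10
print(parser.crawl_delay("Googlebot"))             # None (no delay in the "*" group)
print(parser.can_fetch("Googlebot", "https://example.com/wp-admin/options.php"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/"))                 # True
```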

Minimize your site’s ecological footprint and the greenhouse gas (CO2) emissions associated with its online presence.

According to ChatGPT (OpenAI), the robots.txt produced by the PRO version of Better-Robots.txt is the most sophisticated and comprehensive one available on the web for a WordPress site.

Overview:

AVAILABLE IN 7 LANGUAGES

The Better Robots.txt plugin is available in the following languages: Chinese – 汉语/漢語, English, French – Français, Russian – Русский, Portuguese – Português, Spanish – Español, German – Deutsch.

Did you know that…

- The robots.txt file is a simple text file placed at the root of your website that tells web crawlers (like Googlebot) whether they may access a file.

- The robots.txt file governs how search engine spiders see and interact with your web pages.

- This file, and the bots it communicates with, are integral to how search engines operate.

- The first thing a search engine crawler looks at when it visits a site is the robots.txt file.
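To make the "crawler checks robots.txt first" point concrete, here is a minimal sketch of how a polite crawler might filter its queue of URLs before requesting them. It uses Python’s standard `urllib.robotparser`; the rules, the `example.com` URLs and the helper name `allowed_urls` are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser


def allowed_urls(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Keep only the URLs that robots_txt lets user_agent fetch (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/
"""

queue = [
    "https://example.com/",
    "https://example.com/wp-admin/",
    "https://example.com/cart/",
    "https://example.com/blog/hello-world/",
]
print(allowed_urls(ROBOTS_TXT, "Googlebot", queue))
# ['https://example.com/', 'https://example.com/blog/hello-world/']
```

In a real crawler the file would be downloaded from the site root, for example with `RobotFileParser.set_url()` followed by `read()`, instead of being passed in as a string.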

The robots.txt is a reservoir of SEO potential that’s ready to be tapped into. Give Better Robots.txt a try!

About the Pro version (additional features):

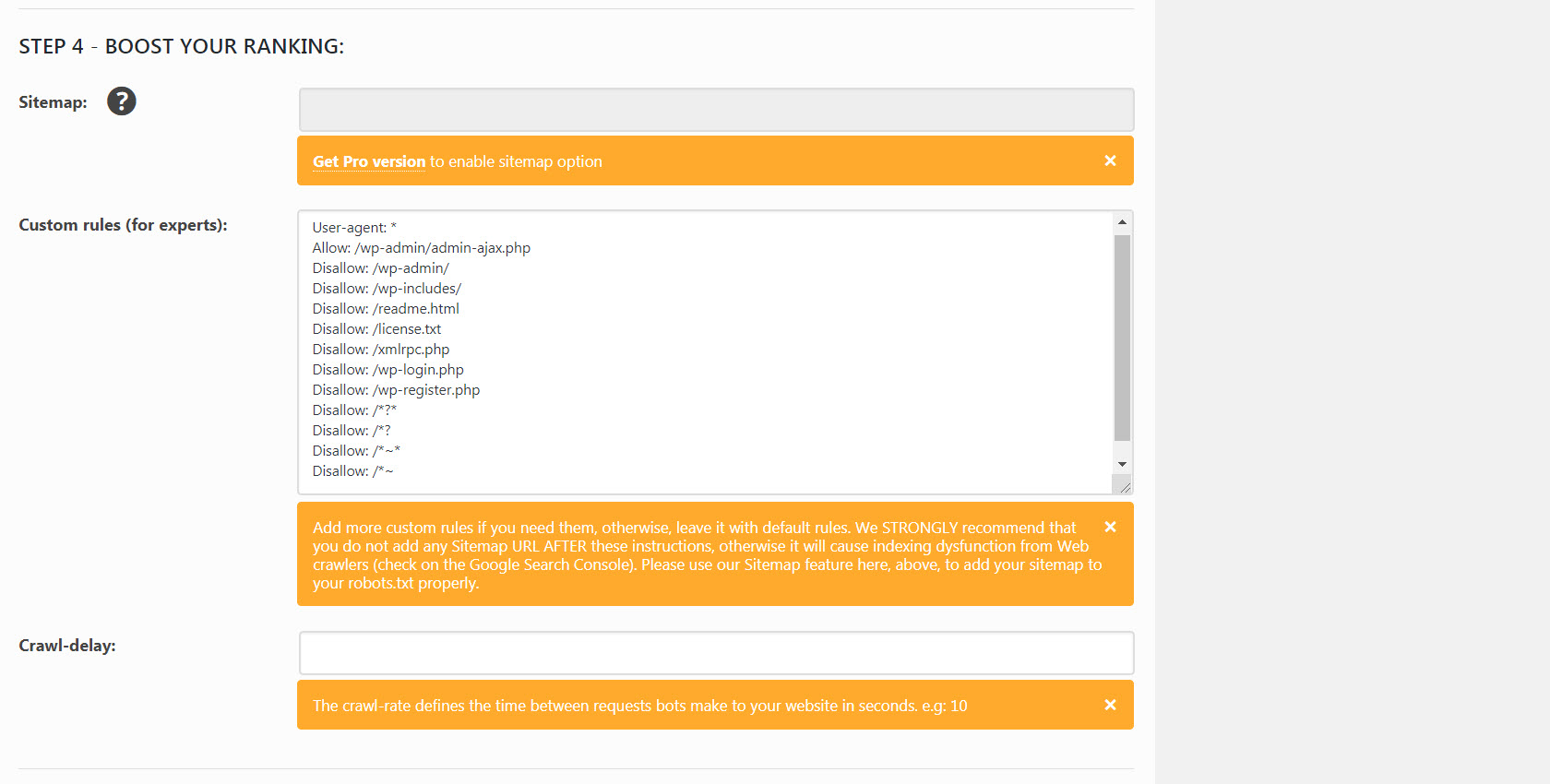

1. Enhance your content visibility on search engines with your sitemap!

Ensure your pages, articles, and products, even the most recent ones, are recognized by search engines!

The Better Robots.txt plugin is designed to work with Yoast SEO and Rank Math (arguably the best SEO plugins for WordPress websites). It automatically detects whether you are using Yoast SEO / Rank Math and whether the sitemap feature is enabled. If so, it automatically adds instructions to the robots.txt file telling bots/crawlers to check your sitemap for recent changes on your website (allowing search engines to crawl any new content).

If you wish to add your own sitemap (or if you are using a different SEO plugin), you simply need to copy and paste your Sitemap URL, and Better Robots.txt will incorporate it into your WordPress Robots.txt.
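As a rough sketch of what adding a sitemap amounts to, the hypothetical helper below appends a `Sitemap:` line to existing rules (skipping exact duplicates), and Python’s `urllib.robotparser` then reads it back with `site_maps()` (available since Python 3.8). The URL `https://example.com/sitemap_index.xml` is only an example; this is not the plugin’s own code.

```python
from urllib.robotparser import RobotFileParser


def add_sitemap(robots_txt: str, sitemap_url: str) -> str:
    """Append a Sitemap directive unless the exact line is already present (hypothetical helper)."""
    directive = f"Sitemap: {sitemap_url}"
    if directive in robots_txt.splitlines():
        return robots_txt
    # Sitemap lines are not tied to any User-agent group, so the end of the file is fine.
    return robots_txt.rstrip("\n") + "\n\n" + directive + "\n"


BASE = """\
User-agent: *
Disallow: /wp-admin/
"""

robots = add_sitemap(BASE, "https://example.com/sitemap_index.xml")
robots = add_sitemap(robots, "https://example.com/sitemap_index.xml")  # no duplicate added

parser = RobotFileParser()
parser.parse(robots.splitlines())
print(parser.site_maps())  # ['https://example.com/sitemap_index.xml']
```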

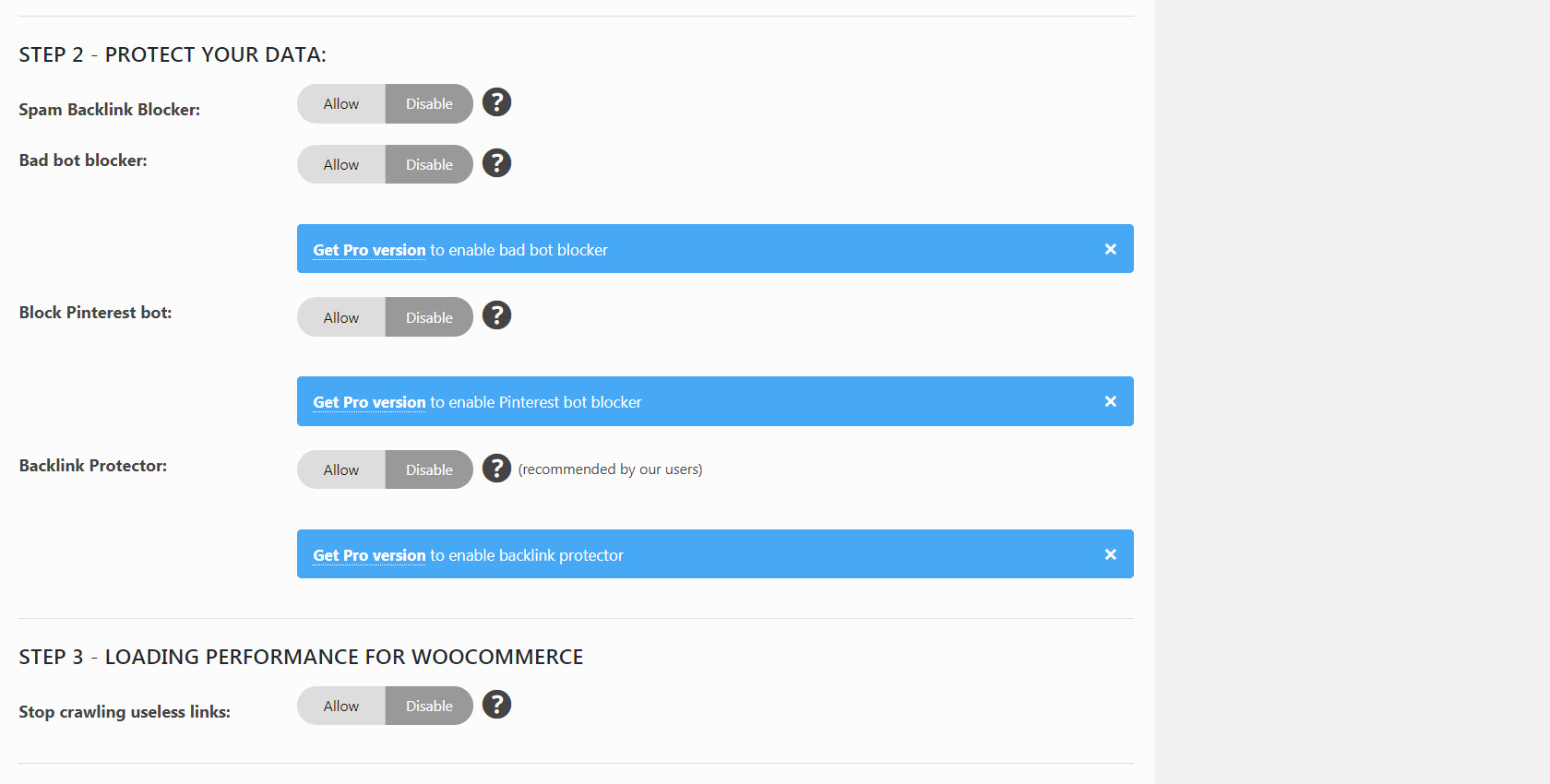

2. Safeguard your data and content

Prevent harmful bots from scraping your website and exploiting your data.

The Better Robots.txt plugin helps block the most common malicious bots from crawling and scraping your data.

Both beneficial and harmful bots crawl your site. Beneficial bots, like Googlebot, crawl your site to index it for search engines. Others crawl it for more malicious reasons, such as repurposing your content (text, prices, etc.) for republishing, downloading entire archives of your site, or extracting your images. Some bots have even been reported to crash entire websites through excessive bandwidth usage.

The Better Robots.txt plugin shields your website against spiders/scrapers identified as harmful bots by Distil Networks.
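A minimal sketch of how a blocklist can be turned into robots.txt groups, checked with Python’s `urllib.robotparser`. The helper `bad_bot_rules` and the bot names are hypothetical (the plugin’s real list comes from the Distil Networks data mentioned above), and robots.txt rules are advisory: they stop only the crawlers that choose to honor them.

```python
from urllib.robotparser import RobotFileParser


def bad_bot_rules(user_agents: list[str]) -> str:
    """Emit one 'User-agent / Disallow: /' group per bot name (hypothetical helper)."""
    groups = [f"User-agent: {agent}\nDisallow: /" for agent in user_agents]
    return "\n\n".join(groups) + "\n"


# Invented bot names, used only for illustration.
BLOCKLIST = ["ExampleScraperBot", "ExampleImageGrabber"]

robots = bad_bot_rules(BLOCKLIST) + "\nUser-agent: *\nDisallow: /wp-admin/\n"

parser = RobotFileParser()
parser.parse(robots.splitlines())
for agent in ["ExampleScraperBot", "ExampleImageGrabber", "Googlebot"]:
    print(agent, parser.can_fetch(agent, "https://example.com/products/"))
# ExampleScraperBot False
# ExampleImageGrabber False
# Googlebot True
```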

3. Conceal & safeguard your backlinks

Prevent competitors from discovering your profitable backlinks.

Backlinks, also known as "inbound links" or "incoming links," are created when one website links to another. From the point of view of the site being linked to, that incoming link is a backlink. Backlinks are particularly valuable for SEO because they represent a "vote of confidence" from one site to another: backlinks to your website signal to search engines that others endorse your content.

If numerous sites link to the same webpage or website, search engines can infer that the content is worth linking to, and therefore worth displaying on a SERP. Earning these backlinks can thus improve a site’s ranking position or search visibility. In the SEM industry, specialists commonly identify where their competitors’ backlinks come from, so they can pick the best sources and build high-quality backlinks for their own clients.

Given that creating highly profitable backlinks for a company is time-consuming (time + energy + budget), allowing your competitors to identify and replicate them so easily is a significant loss of efficiency.

Better Robots.txt helps block SEO crawlers such as Ahrefs, Majestic and Semrush to keep your backlinks hidden.
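One way to express this in robots.txt is a single group with several `User-agent` lines, all sharing `Disallow: /`. The sketch below checks such a group with Python’s `urllib.robotparser`; `AhrefsBot`, `SemrushBot` and `MJ12bot` (Majestic) are the user-agent tokens these crawlers identify with, but this is not necessarily the exact list the plugin ships.

```python
from urllib.robotparser import RobotFileParser

# One group can list several User-agent lines; the rules below them apply to all of them.
ROBOTS_TXT = """\
User-agent: AhrefsBot
User-agent: SemrushBot
User-agent: MJ12bot
Disallow: /

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for bot in ["AhrefsBot", "SemrushBot", "MJ12bot", "Googlebot"]:
    print(bot, parser.can_fetch(bot, "https://example.com/"))
# AhrefsBot False
# SemrushBot False
# MJ12bot False
# Googlebot True
```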

4. Prevent Spam Backlinks

Bots that populate your website’s comment forms with messages like ‘great article,’ ‘love the info,’ ‘hope you can elaborate more on the topic soon’ or even personalized comments, including the author’s name, are widespread. Spambots are becoming increasingly sophisticated over time, and unfortunately, comment spam links can seriously damage your backlink profile.

Better Robots.txt assists in preventing these comments from being indexed by search engines.

5. Artificial Intelligence at the service of Robots.txt

In 2023, we added a robots.txt optimization feature based on OpenAI’s (ChatGPT) recommendations for any WordPress site. These settings maximize the efficiency of robots.txt for search engines to streamline the crawling of a website. More improvements are on the way…

In 2023, ChatGPT-4, after auditing several million robots.txt files available on the web, assessed that the robots.txt deployed by the PRO version of the Better-Robots.txt plugin was the most advanced, comprehensive, and sophisticated configuration currently available for WordPress environments. A point of pride for our team!

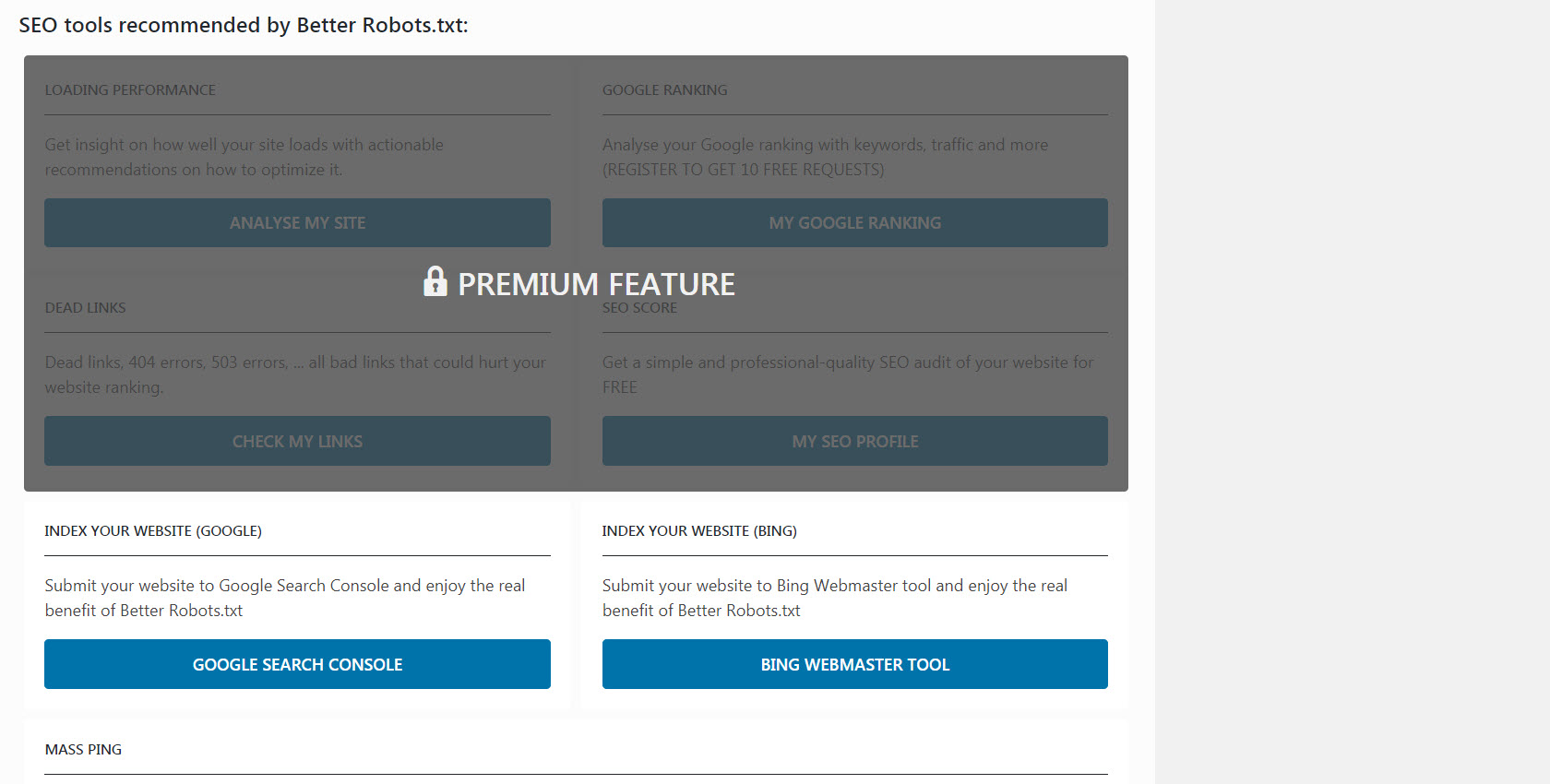

6. SEO Tools

In the process of enhancing our plugin, we’ve added shortcut links to two crucial tools for anyone concerned about their search engine rankings: Google Search Console & Bing Webmaster Tools. If you’re not already using them, you can now manage your website’s indexing while optimizing your robots.txt! We’ve also provided direct access to a Mass Ping tool, which lets you ping your links on more than 70 search engines.

Additionally, we’ve created four shortcut links to some of the best Online SEO Tools, directly accessible through Better Robots.txt SEO PRO. This means you can now check your site’s loading performance, analyze your SEO score, identify your current SERP rankings with keywords & traffic, and even scan your entire website for dead links (404, 503 errors, etc.) directly from the plugin.

7. Stand Out

We thought we could add a unique touch to Better Robots.txt by introducing a feature that lets you "customize" your WordPress robots.txt with your own distinctive "signature." Many major companies worldwide have personalized their robots.txt by adding proverbs (https://www.yelp.com/robots.txt), slogans (https://www.youtube.com/robots.txt), or even drawings (https://store.nike.com/robots.txt – at the bottom). Why not do the same? That’s why we’ve dedicated a specific area on the settings page where you can write or draw anything you want (really) without affecting your robots.txt efficiency.
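The reason a signature does not affect efficiency is that everything after `#` on a robots.txt line is a comment. A minimal sketch, assuming the signature is stored as plain text and prefixed with `#` line by line (the helper `add_signature` is hypothetical), shows that Python’s `urllib.robotparser` gives identical answers with and without it:

```python
from urllib.robotparser import RobotFileParser


def add_signature(robots_txt: str, signature: str) -> str:
    """Append free text as '#' comment lines, which robots.txt parsers skip (hypothetical helper)."""
    commented = "\n".join(f"# {line}".rstrip() for line in signature.splitlines())
    return robots_txt.rstrip("\n") + "\n\n" + commented + "\n"


RULES = """\
User-agent: *
Disallow: /wp-admin/
"""

signed = add_signature(RULES, "Hello, crawler!\n\nThanks for respecting our rules.")

plain, with_sig = RobotFileParser(), RobotFileParser()
plain.parse(RULES.splitlines())
with_sig.parse(signed.splitlines())

urls = ["https://example.com/", "https://example.com/wp-admin/"]
print([plain.can_fetch("Googlebot", u) for u in urls])     # [True, False]
print([with_sig.can_fetch("Googlebot", u) for u in urls])  # [True, False]
```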

8. Prevent Crawling of Redundant WooCommerce Links

We’ve introduced a unique feature that blocks specific links ("add-to-cart", "orderby", "filter", cart, account, checkout, etc.) from being crawled by search engines. Most of these links consume a significant amount of CPU, memory and bandwidth on the hosting server, because they are not cacheable and/or create "infinite" crawling loops, even though search engines have no use for them.

By optimizing your WordPress robots.txt for WooCommerce when running an online store, you can allocate more processing power to the pages that truly matter and enhance your loading performance.
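WooCommerce rules usually rely on wildcards, which Python’s `urllib.robotparser` does not understand (it only does plain prefix matching). The sketch below therefore implements the matching rules of RFC 9309, which Googlebot also follows: `*` matches any run of characters, a trailing `$` anchors the end of the URL, the longest matching pattern wins, and Allow wins a tie. The rule list is an illustrative assumption, not the plugin’s exact output.

```python
import re

# Illustrative WooCommerce rules as (directive, pattern) pairs; not the plugin's exact list.
WOO_RULES = [
    ("disallow", "/*add-to-cart=*"),
    ("disallow", "/*orderby="),
    ("disallow", "/*filter_"),
    ("disallow", "/cart/"),
    ("disallow", "/checkout/"),
    ("disallow", "/my-account/"),
]


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern: '*' = any characters, trailing '$' = end of URL."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Longest matching pattern decides; on a tie, allow wins (RFC 9309 semantics)."""
    best_length, allowed = -1, True  # no matching rule means the path is allowed
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty pattern matches nothing
        if pattern_to_regex(pattern).match(path):  # match() anchors at the start of the path
            length = len(pattern)
            if length > best_length or (length == best_length and directive == "allow"):
                best_length, allowed = length, directive == "allow"
    return allowed


for path in ["/shop/?add-to-cart=42", "/shop/?orderby=price", "/cart/", "/product/blue-shirt/"]:
    print(path, is_allowed(path, WOO_RULES))
# /shop/?add-to-cart=42 False
# /shop/?orderby=price False
# /cart/ False
# /product/blue-shirt/ True
```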

9. Dodge Crawler Traps

"Crawler traps" are structural issues within a website that lead crawlers to discover a virtually infinite number of irrelevant URLs. In theory, crawlers could get stuck in one part of a website and never finish crawling these irrelevant URLs.

Better Robots.txt aids in preventing crawler traps, which can harm crawl budget and result in duplicate content.
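Internal search results are a classic trap, because every new query produces a new URL. As a sketch, assuming search results live at `/?s=term` or `/search/term/` (the usual WordPress forms; the plugin’s actual rule is not spelled out here), two prefix rules are enough, as Python’s `urllib.robotparser` confirms:

```python
from urllib.robotparser import RobotFileParser

# Assumed rules: keep crawlers out of internal search result URLs.
ROBOTS_TXT = """\
User-agent: *
Disallow: /?s=
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in [
    "https://example.com/?s=shoes",
    "https://example.com/search/shoes/page/2/",
    "https://example.com/?p=12",
]:
    print(url, parser.can_fetch("Googlebot", url))
# https://example.com/?s=shoes False
# https://example.com/search/shoes/page/2/ False
# https://example.com/?p=12 True
```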

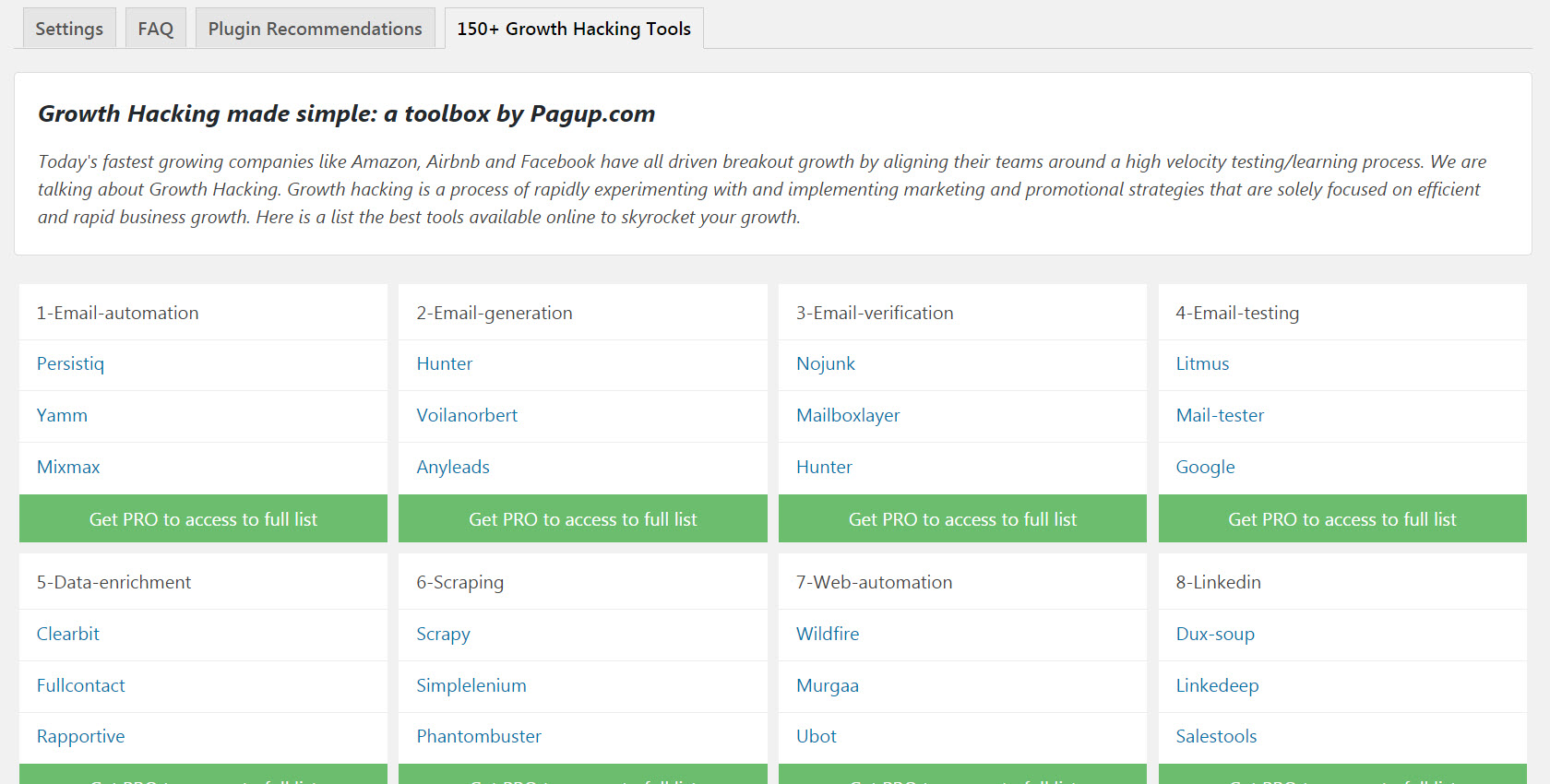

10. Growth Hacking Tools

Today’s fastest-growing companies, including Amazon, Airbnb, and Facebook, have all achieved breakout growth by aligning their teams around a high-velocity testing/learning process. This is referred to as Growth Hacking: a process of rapidly experimenting with and implementing marketing and promotional strategies focused solely on efficient and rapid business growth. Better Robots.txt provides a list of more than 150 online tools to fuel your growth.

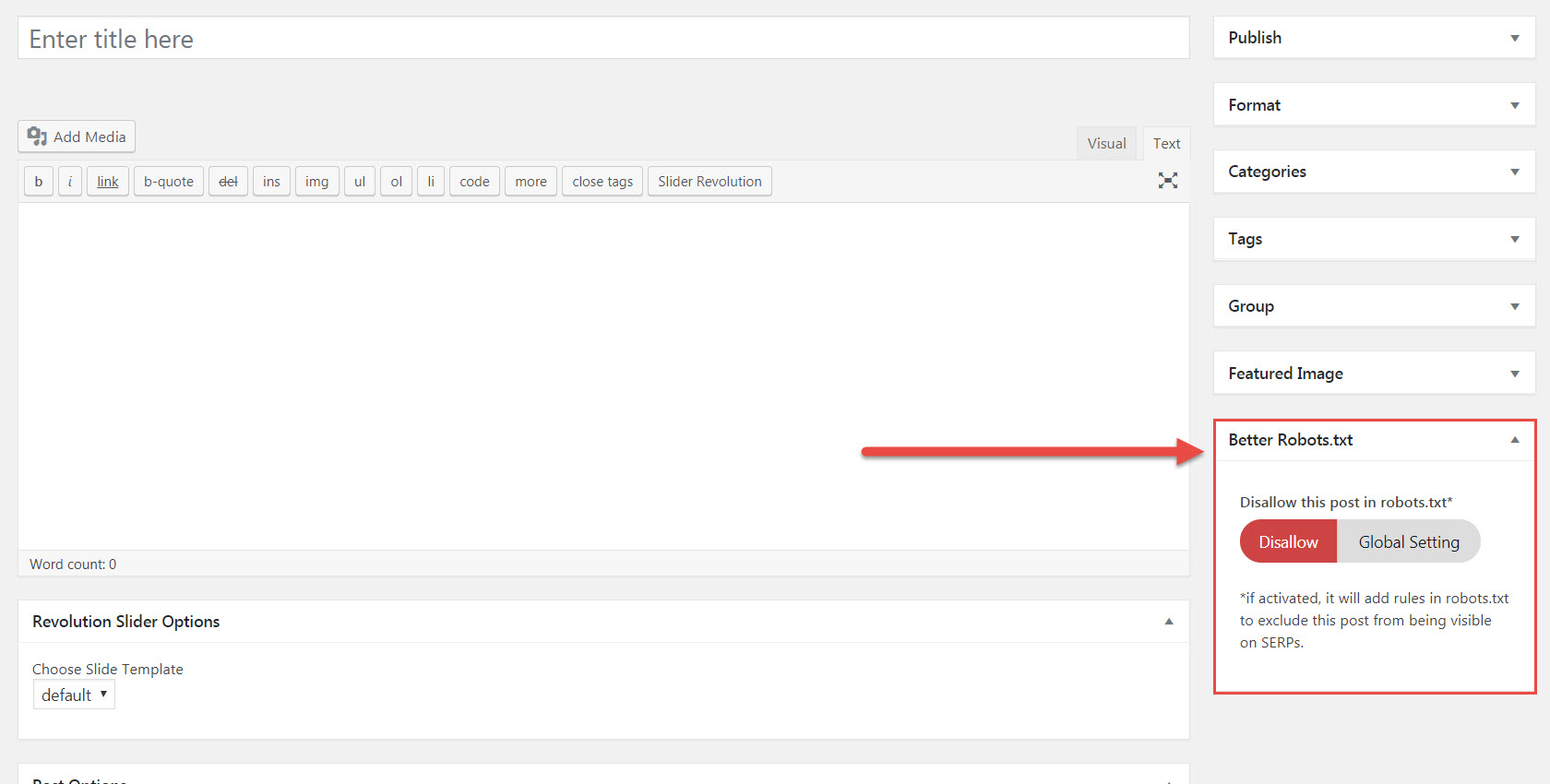

11. Robots.txt Post Meta Box for Manual Exclusions

This Post Meta Box lets you manually set whether a page should be visible on search engines by injecting a dedicated "disallow" + "noindex" rule into your WordPress robots.txt. Why does this help your ranking on search engines? Because some pages are simply not meant to be crawled or indexed.

Thank-you pages, landing pages, and pages containing only forms are useful for visitors but not for crawlers, and you don’t need them to be visible on search engines. Also, some pages containing dynamic calendars (for online booking) should NEVER be accessible to crawlers, because they tend to trap them in infinite crawling loops, which directly affects your crawl budget (and your ranking).
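A minimal sketch of how per-page exclusions could be turned into rules, using a hypothetical helper `exclusion_rules` and invented slugs. It emits only `Disallow` lines, because Google stopped honoring `noindex` inside robots.txt on September 1, 2019 (the same reason given in the 1.2.9.3 changelog entry), and verifies the result with Python’s `urllib.robotparser`:

```python
from urllib.robotparser import RobotFileParser


def exclusion_rules(excluded_paths: list[str]) -> str:
    """Build a '*' group with one Disallow line per excluded page (hypothetical helper)."""
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in sorted(set(excluded_paths))]  # dedupe, stable order
    return "\n".join(lines) + "\n"


# Pages an editor might tick in the meta box (invented slugs).
robots = exclusion_rules(["/thank-you/", "/booking-calendar/", "/thank-you/"])
print(robots)

parser = RobotFileParser()
parser.parse(robots.splitlines())
print(parser.can_fetch("Googlebot", "https://example.com/booking-calendar/2031/05/"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/about/"))                     # True
```

Because the rules are path prefixes, excluding `/booking-calendar/` also covers every date page generated beneath it.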

12. Ads.txt & App-ads.txt Crawlability

To ensure that ads.txt and app-ads.txt can be crawled by search engines, the Better Robots.txt plugin allows them in the robots.txt file by default, regardless of your configuration. For your information, Authorized Digital Sellers for Web, or ads.txt, is an IAB initiative to improve transparency in programmatic advertising.

You can create your own ads.txt files to identify who is authorized to sell your inventory. The files are publicly available and crawlable by exchanges, Supply-Side Platforms (SSP), and other buyers and third-party vendors. Authorized Sellers for Apps, or app-ads.txt, is an extension to the Authorized Digital Sellers standard. It expands compatibility to support ads shown in mobile apps.
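A sketch of the "allowed regardless of configuration" idea, assuming a worst-case setup that blocks the whole site. Python’s `urllib.robotparser` applies the first matching rule, so the `Allow` lines are placed before the catch-all `Disallow: /`; crawlers that follow RFC 9309, such as Googlebot, pick the longest match instead, so for them the order does not matter.

```python
from urllib.robotparser import RobotFileParser

# Worst case: the rest of the configuration blocks the whole site,
# yet ads.txt and app-ads.txt must stay reachable.
ROBOTS_TXT = """\
User-agent: *
Allow: /ads.txt
Allow: /app-ads.txt
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/ads.txt", "/app-ads.txt", "/blog/"]:
    print(path, parser.can_fetch("Googlebot", "https://example.com" + path))
# /ads.txt True
# /app-ads.txt True
# /blog/ False
```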

More enhancements are always on the way…


Contributors & Developers

"WordPress Robots.txt optimizer (+ XML Sitemap) – Boost SEO, Traffic & Rankings" is open source software. The following people have contributed to this plugin:

"WordPress Robots.txt optimizer (+ XML Sitemap) – Boost SEO, Traffic & Rankings" has been translated into 3 locales. Thank you to the translators for their contributions.

Interested in development?

Browse the code, check out the SVN repository, or subscribe to the development log by RSS.

Changelog

1.0.0

- Initial release.

1.0.1

- fixed plugin directory url issue

- some text improvements

1.0.2

- fixed some minor issues with styling

- improved text and translation

1.1.0

- added some major improvements

- allow/off option changed to allow/disallow/off

- improved overall text and french translation

1.1.1

- fixed a bug and improved code

1.1.2

- added new feature "Spam Backlink Blocker"

1.1.3

- fixed a bug

1.1.4

- added new "personalize your robots.txt" feature to add custom signature

- added recommended seo tools to improve search engine optimization

1.1.5

- added feature to detect a physical robots.txt file and delete it if server permissions allow

1.1.6

- added russian and chinese (simplified) languages

- fixed bug causing redirection to better robots.txt settings page upon activating other plugins

1.1.7

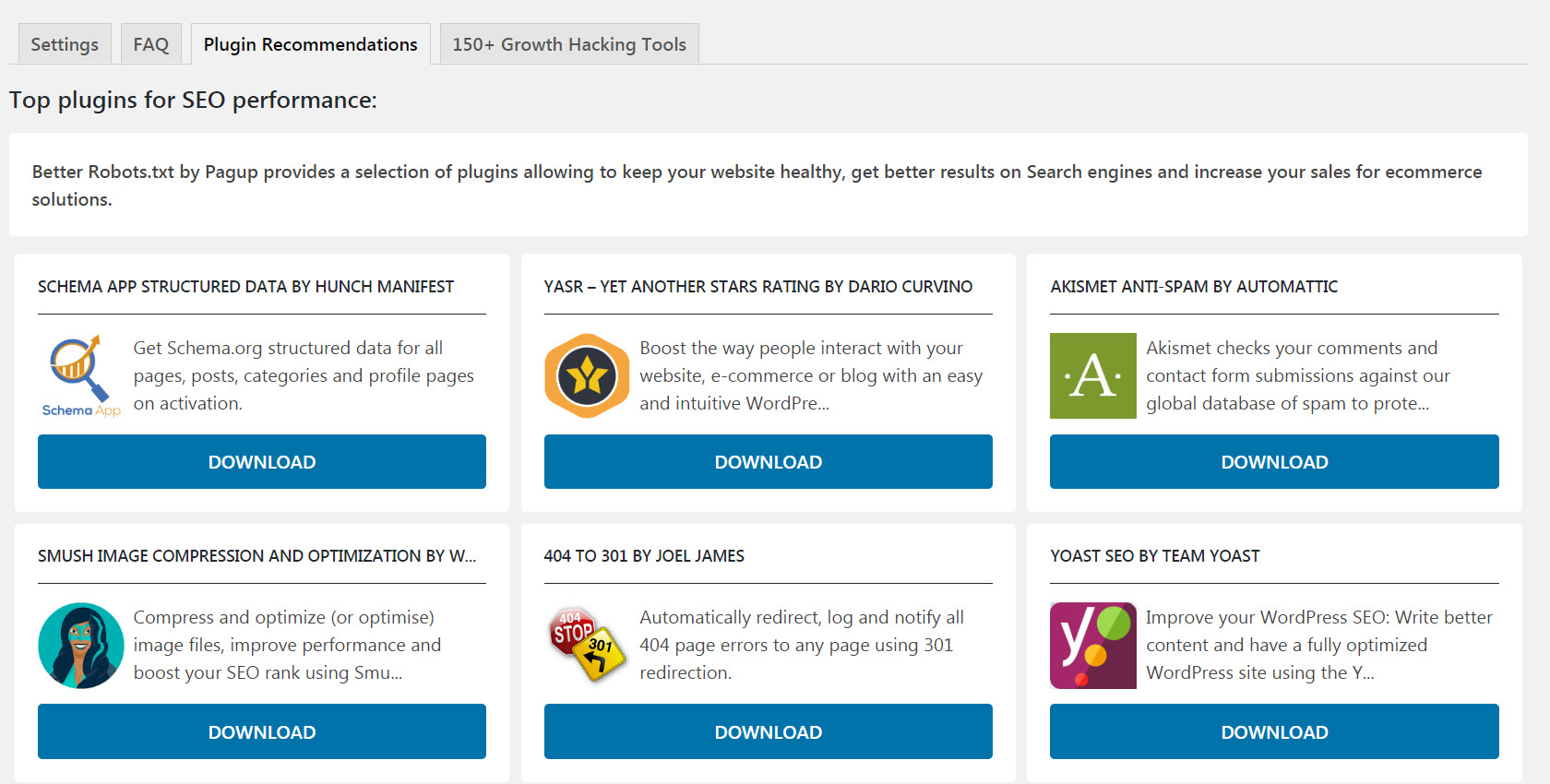

- added new feature: Top plugins for SEO performance

- fixed plugin notices so that, once closed, they stay dismissed for a defined period of time

- fixed stylesheet issue to get proper updated file after plugin update (cache buster)

- added spanish and portuguese languages

1.1.8

- added new feature: xml sitemap detection

- fixed translations

1.1.9

- added new feature: loading performance for woocommerce

1.1.9.1

- fixed a bug in disallow rules for woocommerce

1.1.9.2

- boost your site with alt tags

1.1.9.3

- fixed readability issues

1.1.9.4

- fixed default robots.txt file issue upon plugin activation for first time

- fixed php error upon saving settings and permalinks

- refactored code

1.1.9.5

- added clean-param for yandex bot

- ask backlinks feature for pro users

- avoid crawler traps feature for pro users

- improved default robots.txt rules

1.1.9.6

- added 150+ growth hacking tools

- fixed layout bug

- updated default rules

1.2.0

- Added Post Meta Box to disable individual posts, pages and products (WooCommerce, Pro only). It will add Disallow and Noindex rules in robots.txt for any page you choose to disallow from the post meta box options.

1.2.1

- Added multisite feature for directory-based network sites (pro only). It can duplicate all default rules, Yoast sitemap, WooCommerce rules, bad bots, Pinterest bot blocker, backlinks blocker, etc. with a single click for all directory-based network sites.

- Added version timestamp for wp_register_script ‘assets/rt-script.js’

1.2.2

- Fixed some bugs causing errors in Google Search Console

- Text improvement

1.2.3

- Added "Hide your robots.txt from SERPs" feature

- Text improvements

1.2.4

- Fixed a bug

- Text improvements

1.2.5

- Fixed crawl-delay issue

- Updated translations

1.2.5.1

- Fixed a minor issue

1.2.6

- Security patch in Freemius SDK

1.2.6.1

- Fixed Multisite Issue for pro users

1.2.6.2

- Fixed Yoast sitemap issue for Multisite users

1.2.6.3

- Fixed some text

1.2.7

- Added Baidu/Sogou/Soso/Youdao — Chinese search engines features for pro users

- Added social media crawl feature for pro users

1.2.8

- Notification will be disabled for 4 months. Fixed some other minor stuff

1.2.9.2

- Updated Freemius SDK v2.3.0

- BIGTA recommendation

1.2.9.3

- Fixed Undefined index error while saving MENUS for some sites

- Removed "noindex" rule for individual posts, as Google will stop supporting it as of Sep 01 2019

1.3.0

- Added 5 new rules to default config. Removed 4 old default rules which were causing some issues with WPML

- Added a search rule to Avoid crawling traps

- Added several new rules to Spam Backlink Blocker

- Fixed security issues

1.3.0.1

- VidSEO recommendation

1.3.0.2

- Fixed some security issues

- Added new rules to Backlink Protector (Pro only)

- Multisite notification will be disabled permanently once dismissed

1.3.0.3

- Fixed php notice (in php log) for $host_url variable

1.3.0.4

- Fixed php notice (in php log) for $active_tab variable

- Fixed some typos

1.3.0.5

- Added option to Be part of our worldwide Movement against CoronaVirus (Covid-19)

- Fixed several php undefined index notices (in php log) related to Step 7 and 8 options

1.3.0.6

- 👌 IMPROVE: Updated freemius to latest version 2.3.2

- 🐛 FIX: Some minor issues

1.3.0.7

- 🔥 NEW: WP Google Street View promotion

- 🐛 FIX: Some minor text issues

1.3.1.0

- 👌 IMPROVE: Admin notices are now permanently dismissed on a per-user basis.

- 👌 IMPROVE: Top level menu for Better Robots.txt Settings

- 🐛 FIX: Styling conflict with Norebro Theme.

- 🐛 FIX: Undefined variables php errors for some options

1.3.2.0

- 🐛 FIXED: XSS vulnerability.

- 🐛 FIX: Non-static method errors

- 👌 IMPROVE: Tested up to WordPress v5.5

1.3.2.1

- 🐛 FIXED: Call to undefined method error.

1.3.2.2

- 👌 IMPROVE: Update Freemius to v2.4.1

1.3.2.3

- 👌 IMPROVE: Tested up to WordPress v5.6

- 🐛 FIX: Get Pro URL

1.3.2.4

- 👌 IMPROVE: Added some more rules for Woocommerce performance

- 👌 IMPROVE: Update Freemius to v2.4.2

1.3.2.5

- 🔥 NEW: Meta Tags for SEO promotion

1.4.0

- 👌 IMPROVE: Refactored code to MVC

- 👌 IMPROVE: New clean design

- 👌 IMPROVE: Many small improvements

1.4.0.1

- 🐛 FIX: Added trailing backslash for using trait

1.4.1

- 🔥 NEW: Search engine visibility feature (Pro version)

- 🔥 NEW: Image Crawlability feature (Pro version)

1.4.1.1

- 🐛 FIX: Sitemap issue

1.4.2

- 🐛 FIX: Bugs and improvements

- 🔥 NEW: Option to add default WordPress Sitemap (Pro Version)

- 🔥 NEW: Option to add All in One SEO Sitemap (Pro Version)

1.4.3

- 🐛 FIX: Text issues

1.4.4

- 🐛 FIX: Security fix

1.4.5

- 🐛 FIX: PHP warning undefined index

1.4.6

- 🐛 FIX: SECURITY PATCH. Verify nonce for CSRF attack.

- 🐛 FIX: PHP 8.2 warning undefined index

1.4.7

- 🐛 FIX: Removed Be part of movement against CoronaVirus (Covid-19) option

1.5.0

- 🐛 FIX: Moved Moz bots from Bad bots list to Backlink Protector list.

- 🔥 NEW: You can select and exclude bots in Backlink Protector list (Pro Version)

- 🔥 NEW: Bad Bots: "AI recommended setting" by ChatGPT-4 (Pro Version)

- 🐛 FIX: Security fix

1.5.1

- 🔥 NEW: ChatGPT Bot Blocker, to block ChatGPT Bot from scraping your content (Pro Version)

- 👌 IMPROVE: Encapsulation of Radio Switch Buttons and code refactoring.

1.5.2

- 🐛 FIX: Class initialization

2.0.0

- 🔥 NEW: UI/UX with better experience

- 🔥 NEW: ChatGPT Bot Blocker (blocks ChatGPT Bot) now supported in the free version

- 🔥 NEW: Ads.txt and App-ads.txt support for free version

- 🐛 FIX: Personalization line break issue

- 🐛 FIX: Other fixes and improvements